On March 24, Global Development practice co-lead Aleta Starosta and Research and Evaluation Specialist Miriam Counterman, along with Cloudburst consultant Robert Gerstein and a Social Impact colleague, presented the results of two research projects during a session entitled “Amplifying Evidence in DRG Programming” at the annual Learning Forum of USAID’s Democracy, Human Rights, and Governance (DRG) Center. The presentations covered the results of USAID’s Evidence and Learning (E&L) Team’s Utilization Measurement Analysis (UMA) and the DRG Center’s Mission Use of Evidence (MUSE) study.

The Learning Forum session, attended by more than 100 USAID staff and partners, aimed to discuss how evidence is and is not currently used, the barriers to its use, and the factors that make research products more useful for project design and implementation. In 2011, USAID adopted an evaluation policy formalizing its commitment to high-quality evaluations that generate evidence to inform decisions, promote learning, and ensure accountability. A substantial increase in the number of evaluations and assessments followed, reaching nearly 250 in 2020.

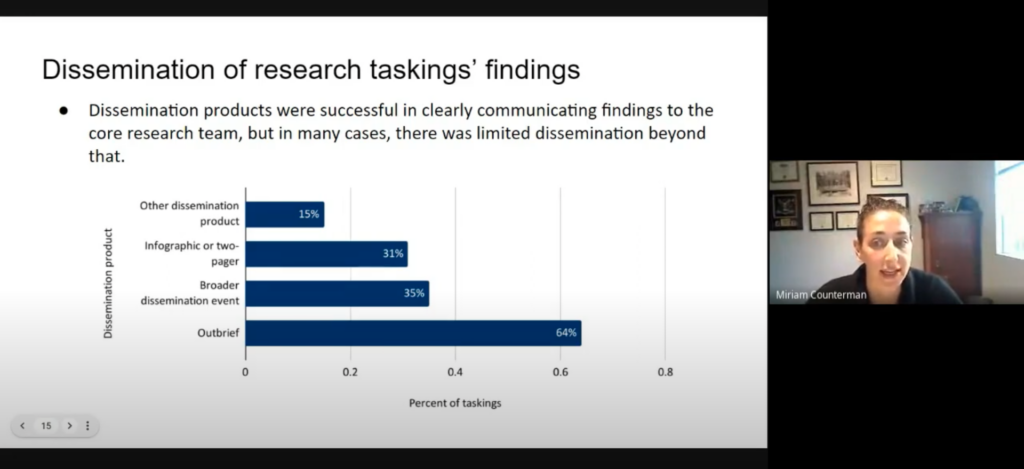

For the E&L UMA, the Cloudburst team interviewed the primary points of contact for 22 of our Learning, Evidence, and Research (LER) II contract taskings, exploring how they used the findings and recommendations from the evaluations or assessments they commissioned. The interviews indicated generally high satisfaction with learning products: interviewees reported using research findings to inform general learning, improve activity design and implementation, and develop strategy. However, the interviews also revealed missed opportunities to put findings to use. Major barriers to utilization included recommendations that were not practical or actionable, budget and time limitations, staff turnover within USAID, and a lack of buy-in or support for evidence-based policymaking.

The findings from both the UMA and MUSE suggest that USAID staff need more time and resources to apply research effectively in their work. Other priorities often overshadow research utilization, and where further use is not required, it may be set aside altogether. The Q&A portion of the session revealed a need to make research more accessible and to synthesize findings so that they are easier to use. USAID’s Development Experience Clearinghouse (DEC), for instance, is currently difficult to use and limited in application. In addition, users may struggle to adapt findings from one context to another, which further limits their utility. Synthesizing multiple reports on a single topic, for example, could inform best practices more broadly within a subsector. Finally, the findings and discussion revealed some disagreement over whether USAID staff need additional training on how to use research findings. Some respondents felt that they knew how but lacked the time to do so, while others wanted additional training on how to interpret and apply research findings.

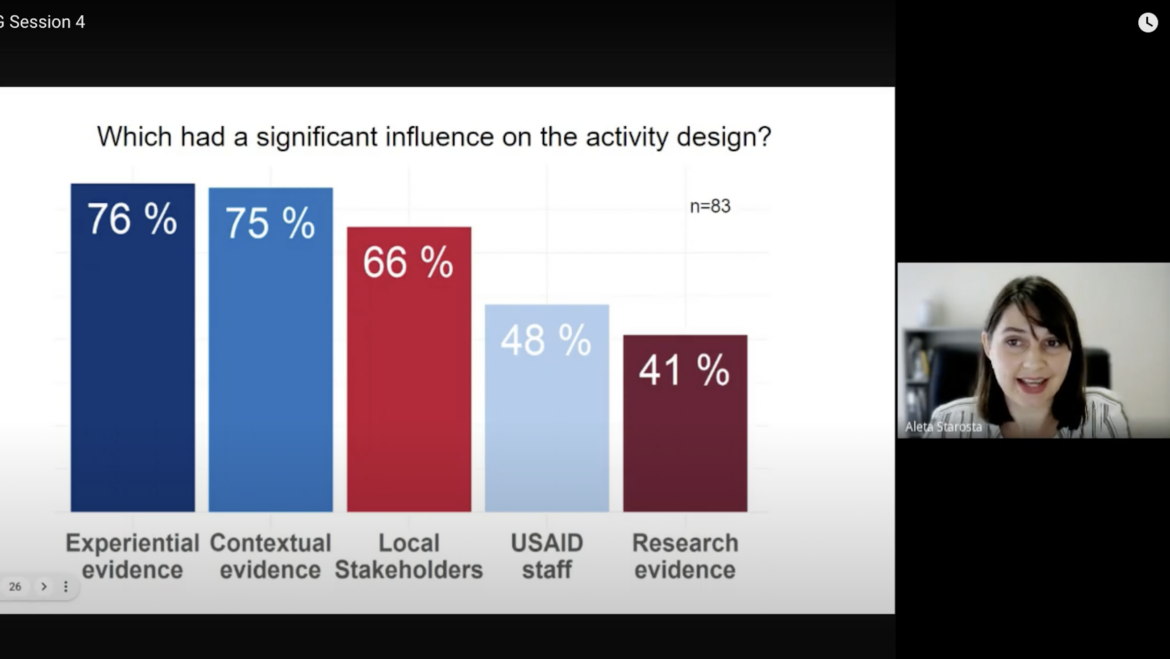

For the ongoing MUSE study, Cloudburst presented preliminary findings based on our initial interviews with USAID Mission staff and a brief survey distributed to DRG Center personnel. While the UMA examined how specific commissioned research products were used, MUSE examines how evidence more broadly informs activity design. The initial findings indicate that designers are more likely to draw on experiential learning (such as input from technical experts, past experience, and lessons learned), contextual evidence (such as local data sources and assessments), and the views of local stakeholders than on rigorous research evidence. As in the UMA, the most common barrier identified was insufficient staff time and resources. Respondents also reported that they found research evidence difficult to locate and apply.

The session underscored both the appreciation for and the potential of the research that Cloudburst and other learning partners produce for USAID. However, it also made clear that more work is needed to ensure that this research is used to its full potential. Close collaboration between researchers and USAID staff is key to ensuring that recommendations are both useful and used.